Young Voices for Fair AI: Research That Connects

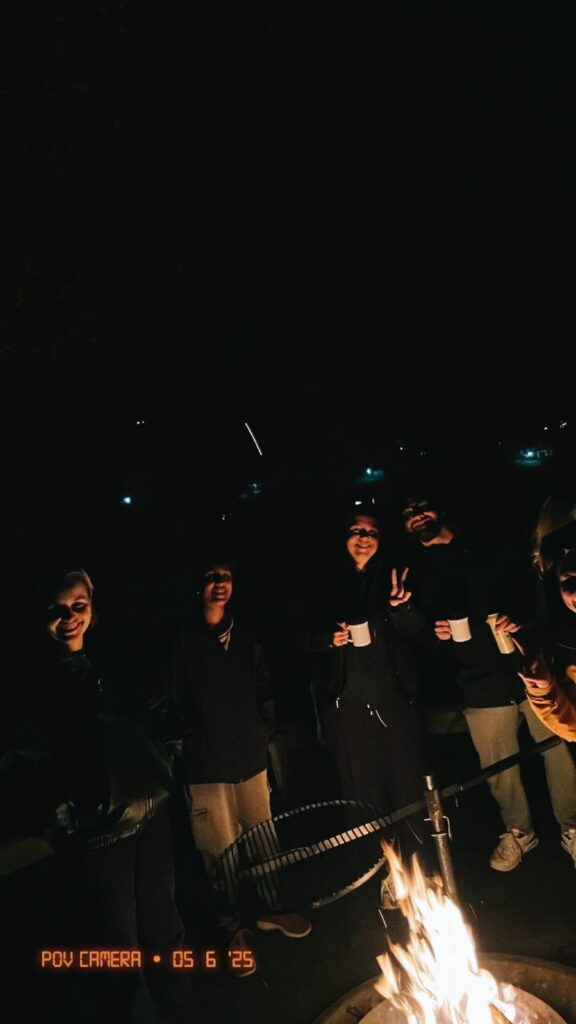

What happens when young researchers tackle bias in language models – not in a conference room, but while hiking, cooking, and discussing in the mountains? The first edition of KnitTogether showed that research needs trust, exchange, and space for new perspectives.

Fog, rain, snow. And in the middle of it: 25 young researchers from across Europe spending a week exploring societal biases in AI systems.

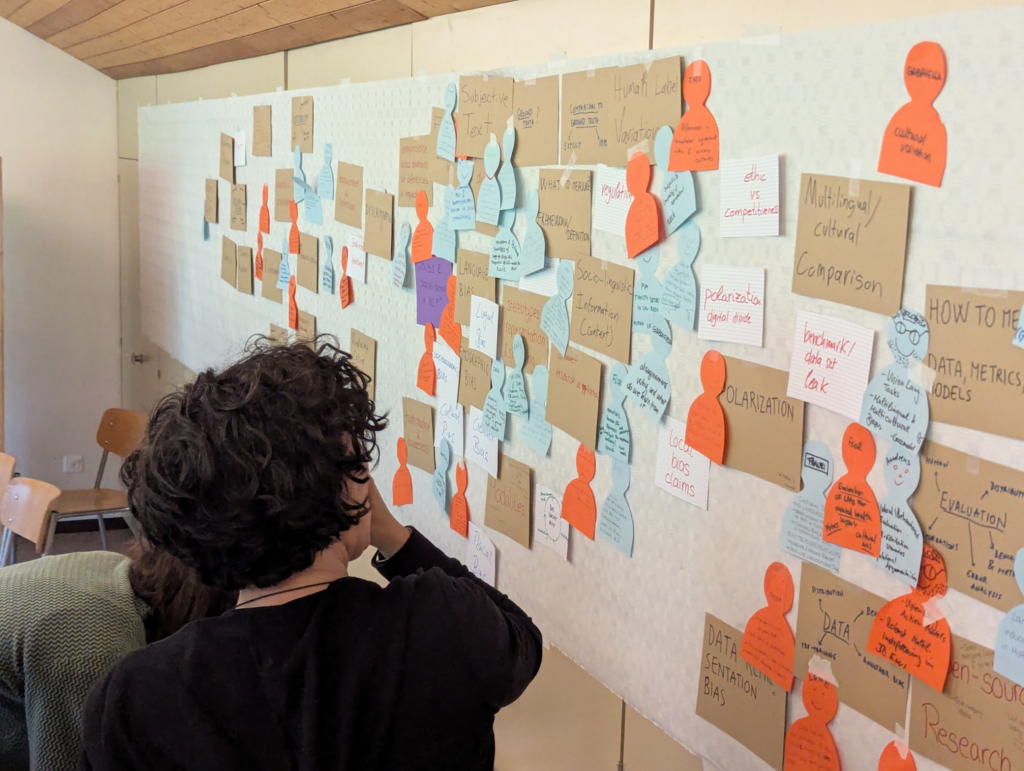

Instead of conference routines, the program was shaped by workshops, personal conversations, and shared activities.

KnitTogether – A Research Week in the Mountains

KnitTogether is a format for early-career researchers that fosters exchange on socially relevant questions related to artificial intelligence. The first edition took place in May 2025 in the Appenzell village of Wildhaus – organized by Lucerne University of Applied Sciences and Arts, the University of Stuttgart, and the University of Hamburg.

At its core was the topic of bias in language models – societal distortions that can be reflected in AI systems, such as gender inequality. Twenty-five participants from across Europe discussed how fair and transparent modeling can be achieved.

Instead of lectures, the program featured workshops, peer reviews, mini-talks – along with shared cooking, games, and hiking. This made the exchange not only productive but also personal and connecting.

The week was made possible thanks to the support of Lucerne University of Applied Sciences and Arts, the University of Stuttgart, the University of Hamburg, and the Society for Language Technology and Computational Linguistics.

Three Takeaways from a Week of Pressure-Free Research

1. Trust before Data

Good research begins with relationships: with open exchange, shared experiences, and the freedom to show vulnerability. KnitTogether made clear how essential safe spaces are – especially for young researchers often caught between pressure and high expectations. Many participants used this protected environment to figure out what they truly want to focus on in their research and where they want to grow personally.

2. AI Needs Critical Awareness

At the center of the week was the topic of bias in NLP (Natural Language Processing) – biases in language models that reflect or even reinforce societal inequalities. Whether it’s gender-neutral language, cultural stereotypes, or the dominance of English: These distortions are not merely technical errors; they reflect societal power structures embedded in the training data. And these structures are constantly changing. The participants agreed: Research must account for this – and above all, make bias visible so users can engage with these models responsibly and confidently.

Making Bias in AI Visible Means Taking Responsibility

Language models are based on massive amounts of text and in the process also adopt societal biases present in these training data. These biases can relate to gender, origin, language, or social role models. They are not merely technical side effects, but reflections of societal power structures – and can be further amplified by AI systems.

In this context, transparency means:

- Communicating known biases in the model openly

- Documenting data sources and design decisions

- Enabling users to critically reflect on the models

Technically, this can include:

- Model Cards: A kind of product label for language models that outlines data sources, limitations, and known biases.

- Bias Metrics: Tests such as the Gender Bias Score can quantify distortions.

- Debiasing Methods: Balancing training data or training models so that their responses are less biased.
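To make the last two points more concrete, here is a minimal Python sketch. It is not the Gender Bias Score mentioned above, whose definition is not given in this article, and it is not a method presented at KnitTogether. It assumes a toy corpus, a tiny hand-picked word list, and a simple co-occurrence measure (the names `gender_skew` and `counterfactual_augment` are hypothetical), and it illustrates balancing training data by adding a gender-swapped copy of every sentence.

```python
import re

# Assumption: a deliberately tiny word list; real studies use far richer lexicons.
MALE = {"he", "man"}
FEMALE = {"she", "woman"}
SWAP = {"he": "she", "she": "he", "man": "woman", "woman": "man"}


def tokenize(sentence):
    """Lowercase a sentence and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", sentence.lower())


def gender_skew(corpus, target):
    """Toy bias metric for one target word.

    Counts male and female words in every sentence that contains the target
    and returns (male - female) / (male + female).
    +1.0 means only male context, -1.0 only female context, 0.0 balanced.
    """
    male = female = 0
    for sentence in corpus:
        tokens = tokenize(sentence)
        if target in tokens:
            male += sum(t in MALE for t in tokens)
            female += sum(t in FEMALE for t in tokens)
    total = male + female
    return 0.0 if total == 0 else (male - female) / total


def counterfactual_augment(corpus):
    """Toy debiasing step: add a gender-swapped copy of each sentence."""
    swapped = [" ".join(SWAP.get(t, t) for t in tokenize(s)) for s in corpus]
    return list(corpus) + swapped


# Assumption: an invented toy corpus, used for illustration only.
toy_corpus = [
    "He is an engineer",
    "The engineer said he was late",
    "She is an engineer",
    "She works as a nurse",
    "The nurse said she was tired",
]

balanced_corpus = counterfactual_augment(toy_corpus)

for word in ("engineer", "nurse"):
    before = gender_skew(toy_corpus, word)
    after = gender_skew(balanced_corpus, word)
    print(f"{word}: skew before = {before:+.2f}, after balancing = {after:+.2f}")
```

In this toy corpus, "engineer" leans male and "nurse" leans female before balancing, and both skews drop to zero afterwards. Real language models need much more careful measurement, but the sketch shows the basic idea: first make a distortion measurable, then change the data and measure again.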

3. Lightness Enables Depth

Whether hiking, cooking, or through formats like a simulated conference: The week demonstrated what can emerge when science allows space for playfulness. In an interactive game, participants experienced the full research process – from idea generation through methodology and data collection to abstract and peer review. What felt like play nurtured creative thinking and honest exchange.

Research Needs Space and Resonance

This research week highlighted the importance of relaxed, accessible formats for scientific exchange. Especially during a PhD, the pressure to succeed is immense, and often only other people’s results are visible.

An honest exchange – even about the difficult things – feels like balm for the soul.

Andreas Waldis

This makes it all the more valuable to have spaces where doubts, questions, and insecurities are welcome. For many PhD students, honest conversations – especially about challenges – are deeply reassuring.

KnitTogether wasn’t just a productive week in the mountains – it was an impulse for a new research culture. We hope this first edition won’t remain the only one, but will be the first of many.

Voices from the Week

Ana Baric

PhD student

University of Zagreb

What was your highlight of the week?

Ana Baric: That’s hard to say because the whole week was so productive and fun. If I had to pick, my personal highlight was the conference simulation game. From the treasure hunt for research funding to simulating research results and the brilliant evening social event – I loved it all. And of course, the shared cooking – that’s something I’ll remember for a long time.

What did you take away – professionally or personally?

The discussions opened up new perspectives. It was refreshing to view familiar problems through so many different lenses. I returned home with renewed motivation – and it’s still with me today.

What makes this format so valuable?

It’s accessible and productive at the same time. Without context, someone might think we were doing children’s activities – but it’s precisely that playful nature that allowed us to talk about science at our own pace, without fear of judgment.

Anne Lauscher

Professor

University of Hamburg

What was your highlight of the week?

Anne Lauscher: I was thrilled to meet so many new, smart people. It was great to reconnect with old colleagues. I was especially impressed by the simulated conference work. The researchers delivered high-quality results and showed how easily and joyfully ideas can emerge when the environment is right.

What did you take away – professionally or personally?

I was reminded how individual the challenges of PhD students are. Creating a safe and motivating research environment is crucial.

Research thrives on community – on people who care about similar topics and are willing to engage in respectful debate.

Anne Lauscher

Why are formats like KnitTogether important?

Research thrives on community and people passionate about similar topics. But traditional formats like conferences often come with a lot of pressure. Especially for doctoral candidates, it’s important to have protected spaces where they can connect and grow – free from academic performance pressure.

Participants of the KnitTogether week, among them co-organizer Andreas Waldis (bottom right in the picture)

Andreas Waldis

Andreas Waldis is a Senior Research Associate at the Department of Computer Science at Lucerne University of Applied Sciences and Arts. He researches fairness and transparency in AI systems – focusing on language models and how to better understand them to enable broad societal benefit. His current work includes gender-fair language, the emergence of toxic content in language models, and how such models can contribute to digital democracy. He co-organized the first KnitTogether week and co-authored this report from the organizing team’s perspective.

Published: June 24, 2025

